ADAM is About Advanced Design and

Manufacturing and Cyberspace Product and Service Reviews in our

Virtual Exhibition Hall, Including the Latest News, the Most

Innovative International Products & Services, New Strategies,

R&D, and more...

ADAM: R&D

Article

Ashton, P. and Ranky,

P.G.: An Advanced Concurrent

Engineering Research Toolset and its Applications at Rolls-Royce

Motor Cars

Published by ADAM at

http://www.cimwareukandusa.com, © Copyright by CIMware Ltd. UK

and CIMware USA, Inc.

Please feel free to download this paper with

its full contents,

FREE

of charge, but always mention the website:

http://www.cimwareukandusa.com and the author(s) as the

source!

Website:

http://www.cimwareukandusa.com

Email: cimware@cimwareukandusa.com


Addresses: Philip T. Ashton, PhD,

Manager, Rolls-Royce Motor Cars Limited, Crewe Cheshire, United

Kingdom. (Please note, that Dr. Ashton can be contacted via ADAM:

cimware@cimwareukandusa.com)

Paul G. Ranky, Dr. Techn/PhD, Research

Professor, Department of Industrial and Manufacturing Engineering,

New Jersey Institute of Technology, University Heights, Newark,

NJ07450, USA, Email: ranky@admin.njit.edu


Abstract


The prime objective of our research and development project was to

construct, validate and apply an integrated, concurrent engineering

research methods toolset that aids, promotes and facilitates

Rolls-Royce Motor Cars' desire to move from sequentially based

current product model development to a parallel approach that

integrates with the company's new logistic infrastructure.

Parallel, or concurrent, or simultaneous engineering (CE/SE),

meaning exactly the same, represents a new opportunity to integrate

design, manufacturing, assembly, quality control, shop floor

automation, marketing and other processes in order to cut lead time

and to cut waste in the logistic chain. CE/SE is a new approach to

product development. It focuses on parallel versus sequential

interaction among various product life cycle concerns.

It is a systematic approach to the integrated, concurrent design

of products and their related processes, including manufacture and

support. This approach is intended to cause developers, from the

outset, to consider all elements of the product life cycle from

conception through disposal, including quality, cost, schedule and

user requirements.

The purpose of the research and development, validation and

application of an advanced concurrent engineering research toolset at

Rolls-Royce Motor Cars Limited is to:

- Shorten lead time,

- Increase productivity and

- In integration with the new logistic system, support product

research and development that is of very high quality, reliable, less

expensive than earlier versions and reflects the customers'

requirements in a very competitive world market.

This case study oriented research paper, the first in a series of

papers to be published, discusses some of the strategic issues and

gives examples of the research toolset

and some of its applications at Rolls-Royce Motor Cars Limited.

Keywords


Concurrent, or Simultaneous, or Parallel Engineering Methods,

Automobile Design and Manufacture, Logistics, System Analysis and

Data and Object Modeling, Knowledge-based Expert System application,

Enterprise re-engineering

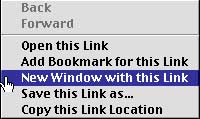

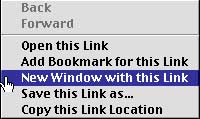


Introduction And Requirements Analysis

Like other motor vehicle manufacturers, Rolls-Royce Motor Cars has

been forced by the prevailing economic conditions, and by the

subsequent pressure from competitors encroaching upon its market

niche, to re-evaluate all of its activities associated with the

design, manufacture and support of its products ([1] to [3]

and [8] and [9]). More than ever, the company

has to compete on such factors as cost of operations, quality of

products and timeliness of manufacture/delivery to maintain the

uniqueness of its business.

To attain these objectives, the many diverse company functions had

to act in unison towards the same goals. Consequently, Rolls-Royce

Motor Cars had to develop its own interpretation of lean/agile

production. In particular the traditional approach to engineering,

manufacturing and after sales support for new and existing products

by sequential methods was challenged through an evolving process of

concurrent or simultaneous or parallel processing, as it is called in

the company.

Figure 1 summarizes the key issues of the old sequential

engineering method. Figure 2 illustrates the alternative, concurrent

or parallel approach. Figure 3 describes some of the core activities

outlined above in the form of a simplified data flow diagram. As can

be seen, both the existing and the new product development

activities are shown in this figure, integrated by a framework

architecture referred to by Rolls-Royce as Product Development &

Solutions Management. Figure 4 is a schematic, relating to Figure 3,

that shows in a simplified format what Rolls-Royce Motor Cars'

business data store is composed of.

The parallel engineering approach dictates the evaluation and

inclusion of all the multiple and competing factors in the process of

product design, manufacture and after sales support. In order to

understand the "as is" system and then to model the "new, to be

system" a research methodology and toolset was created and validated

by the authors ([1], [4], [10], [12],

[13] and [15]).

In order to construct a comprehensive research methods toolset we

had to identify the strategic

issues, rely on management's support, learn and apply business

process re-engineering methods and practices. Furthermore we

needed the ability to integrate the specific processes of interest

through object modeling.

To that end, the premise was that the research methods toolset

should give access to, and support the use of, the following types of

modeling:

- Data modeling (e.g. through ERD, Entity Relationship Diagrams

and modeling)

- Process modeling (through IDEF-PA, DFD, CIMpgr or other

methods including the creation and evaluation of process

descriptions and data dictionaries)

- Simulation modeling (through discrete event and graphic

modeling to study the dynamic response of the system)

- "Intelligent" decision modeling (using rule based expert

systems)

- Mental modeling (by means of human conceptualization and

reasoning)

Through data modeling, process modeling, some simulation work and

software development done with knowledge based expert systems and

object oriented database management systems, we have identified and

reviewed our initial perception of the different and competing

factors and events that influence the parallel processing activities

of the manufacturing cells/zones, the Current Model Development Team

and the Operations Engineering Group within Rolls-Royce Motor

Cars.

Then, as the next step, we started to capture the knowledge

from the above discussed activities and modeled it through the object

oriented research methods toolset that we created as part of

this research project. This assisted us in defining what had to be

done in parallel and what sequentially. This systematic activity

included the establishment of objects and classes. As the basic

method we have taken physical objects and data information objects

together and looked at their interaction to form knowledge objects

([4], [5], [11], [12], [15]

and [17]).

Furthermore we were using various simulation techniques to provide

the predictive and additional dynamic perspective to the research

methods toolset. Simulation within our model and methodology gave the

time domain reasoning facility that broadened the scope of the

knowledge based system.

By using object orientation, data and process modeling and

simulation together, we could define the key attributes for

consistency of process decomposition to ensure that they maintain the

same levels of homogeneous "abstractness" for effective object

classification.

Associated with this activity was the identification of what was a

structured piece of data or information (i.e. "data about data") and

what was unstructured data or information. As part of the process we

could also identify the transition of attributes as the decomposition

process continued across consistent levels of abstraction

([18] to [22]).

In order for the reader to understand the breadth and the depth of

our research task, Figure 5 illustrates the key data flow model of

the model year project development team's key processes (reflecting

parallel engineering as well as the related and integrated logistics

requirements and processes). Furthermore, Figure 6, Figure 7 and

Figure 8 show the principal participants in the project development

process, from the design and manufacturing points of view, as well as

their interactions. These figures summarize the key processes, and

their owners, that we had to interact with in terms of both

engineering and logistic decisions in this very exciting research

project. Figure 9 explains the way production planning and control

data flows were designed to cope with the changes introduced by

concurrent engineering.

The Sequence, Interaction & Application Of The Research Methods Tool Set

The purpose of this section is to illustrate, in a research case

study-oriented style, the sequence of evolution, exchanges &

application of the Research

Methods Tool Set elements conducted and validated successfully

throughout the research program.

- Firstly the specific goals, objectives & expectations of

the Research Methods Tool Set were confirmed in order to satisfy

the Model Year Team Research Program requirements. This was based

on the pre-defined attributes required for high quality academic

research and RRMC (Rolls-Royce Motor Cars) Business

acceptance.

- Secondly, the core Object Oriented Modeling activities within

the Research Program were undertaken mostly focusing on Data,

Process & Object Modeling. These activities represented the

abstract activities undertaken before any initial software was

written or subsequently modified as the Model Year program

proceeded. They were conducted primarily to attain the core

framework and structure of the entire program.

- Thirdly, application software was created (mostly in the form

of system analysis and modeling, simulation, knowledge based

expert system and its rules and object oriented distributed

databases) based on the results from the aforementioned modeling

techniques. The software was the prime medium that the Model Year

Team could directly use to manage the agreed Product Data &

Development activities within a Model Year Development Program.

Again, the sequence for delivering the Software reflected the core

Academic & Business objectives planned at the beginning of the

project.

As Figure 10 illustrates, the above activities proved not to be a

simple sequential process in which each phase was concluded with no

recourse to any preceding phase.

Experience showed that this had to be an iterative exercise,

ensuring that the output from any single modeling activity was

reflected within the other members of the Research Methods Tool Set.

This was true for all Modeling Techniques or Application Software

developed within this research program.

Research Methods Tool Set Requirements

For both the purposes of the academic research objectives and

hypotheses, as well as for the key business objectives expected to be

fulfilled, it was necessary to translate the requirements into

detailed, demonstrable deliverables.

The key requirements set and achieved were as follows:

- Top management support

- All users involved in the design and implementation

processes

- Resistance to change overcome

- Project champion appointed and recognized by all involved

- Project relationships and communication with its

customers/suppliers established and managed successfully

- Project teams recognized that the research and development

phase was just the start and that the project had to be carried

forward to the implementation and ongoing support phases and had

to interact and integrate with other projects at later

stages.

Each of the objectives listed above, and their subsequent

deliverables, had to be equally usable across the different phases of

the project. Generic definitions had to be identified and agreed so

that, for example, the tools and processes for BOM (Bill of Material

file) Management in the verification process phase could be employed

in any of the other phases, e.g. Job 1 (when the first automobile

rolls off the assembly line).

Any data or objects created for the research project had to have a

prime owner and had to be created once but used by many, many times.

For example, if a part was created in one process then it had to be

known to others in the suite of processes. Its characteristics and

attributes also had to be available to other processes so that

duplication and re-interpretation were eliminated.
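
The "created once, used by many" principle can be illustrated with a minimal sketch. The class names `PartObject` and `PartRegistry`, the part number and the owner label are hypothetical and chosen only for illustration; they are not taken from the Research Methods Tool Set itself.

```python
from dataclasses import dataclass, field


@dataclass
class PartObject:
    """Hypothetical part record: one prime owner, attributes visible to all."""
    part_number: str
    prime_owner: str
    attributes: dict = field(default_factory=dict)


class PartRegistry:
    """Shared store in which each part is created once and then reused."""

    def __init__(self):
        self._parts = {}

    def create(self, part_number, prime_owner, **attributes):
        # Refuse a second creation so that no process can duplicate or
        # re-interpret a part that another process has already defined.
        if part_number in self._parts:
            raise ValueError(f"{part_number} already exists; look it up instead")
        part = PartObject(part_number, prime_owner, dict(attributes))
        self._parts[part_number] = part
        return part

    def lookup(self, part_number):
        # Every process reads the same object, including its attributes.
        return self._parts[part_number]


registry = PartRegistry()
created = registry.create("P-1001", "Feature Owner A", description="door seal")
seen_elsewhere = registry.lookup("P-1001")
```

Here a second process that calls `lookup` receives the very object the first process created, so the attributes are defined in one place only.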

A mechanism was needed that enabled the creation of Parts &

Bills Of Material data independently of the formal Product Change

processes within the Product Support Group. This dictated a

process that would give the Product Development staff the independent

ability to manage their own data yet, when necessary, seamlessly pass

it to those remaining company processes not fully integrated within

the Model Year Operation.

For the Model Year Team, this required the continued reinforcement

of the Part Number as the prime focus of activity. The Research

Methods Tool Set had to give the Product Developers the tools

to develop products but not abdicate the responsibility of providing

support processes with the Part Number focused data that they

needed.

The Part Number had to become more meaningful through some form of

Coding and Classification as well as by associating any key

supporting data or objects directly with it. This became the focus

for Parts Index Numbers, Inventory Types etc., as well as for our

Knowledge Based Expert System, which integrated with the core design

activities and advised designers on part number creation and

allocation. (This became one of the novel features of our research

work.)

To interact seamlessly with existing company processes and systems

the Research Methods Tool Set had to be fully understood and its

system integration requirements considered by other systems, most

importantly the new logistics system in the company. For example,

MASCOT was the prime Logistics process and system in Rolls-Royce.

Consequently, the software had to consider its requirements for

formatting a Part Number as it was the prime source of Part

Data used by the Product Support Group when undertaking

Current Model Engineering. Where necessary, given the

constraints of Software & Process Development budgets, timescales

and capabilities, different scenarios for integrating the two systems

had to be explored and validated ([13]).

For concurrent data development by individuals within the

Project Team and others within the non-team based company

processes, Prime Authorship, enabled through Authorship Control,

was required. Both of these principles had to be introduced to the

Part Objects and Bill of Material Object relationships. The

characteristics of Rolls-Royce Motor Cars' Parallel Processing had to

give other interested parties an early indication of the Part Master

& Bill Of Material data, processes and objects whilst still

retaining control with those authors accountable for its accuracy

& effectiveness.

Although the Project Team personnel may have initiated the data,

there were various points in time when the responsibility for this

transferred to others either within the team or outside of it. The

tools had to reflect the multi-source nature of the key data/objects

and any data/objects that were dependent upon them. One of the

most important challenges was that the Research Methods

Tool Set had to be constructed and operated in such a way that it

reflected the evolutionary nature of any Data, Processes &

Objects within the Project.
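
As an illustration only, authorship control with early visibility and a later hand-over of responsibility might be sketched as follows. The class `AuthoredRecord`, the author labels and the data fields are assumptions made for this example and do not describe the software actually delivered.

```python
class AuthorshipError(Exception):
    """Raised when someone other than the current author tries to change data."""


class AuthoredRecord:
    """Hypothetical record that anyone may read but only its author may change."""

    def __init__(self, key, author):
        self.key = key
        self.author = author
        self.authors_so_far = [author]
        self._data = {}

    def read(self):
        # Early visibility: any interested party may read a copy of the data.
        return dict(self._data)

    def update(self, who, **changes):
        # Control stays with the author accountable for accuracy.
        if who != self.author:
            raise AuthorshipError(f"{who} is not the current author of {self.key}")
        self._data.update(changes)

    def transfer(self, who, new_author):
        # Responsibility can move inside or outside the team over time.
        if who != self.author:
            raise AuthorshipError(f"{who} cannot transfer authorship of {self.key}")
        self.author = new_author
        self.authors_so_far.append(new_author)


record = AuthoredRecord("P-1001", "project_team")
record.update("project_team", material="leather")
record.transfer("project_team", "logistics")
record.update("logistics", supplier_code="S-42")
```

After the transfer the original author can still read the record but can no longer change it, which mirrors the hand-over of responsibility described above.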

To the Product Engineers each iterated Part Entity was

simply an evolution based upon the original specification. However,

the Logistics function viewed each of these iterations as separate

entities requiring distinct processes to manage them. Within the "as

is model" scenario, there was no method of distinguishing between

these different entities. Therefore it was an objective of the

Research Methods Tool Set to provide this capability in the "to be

system" model, whilst at the same time not constraining the required

flexibility that the Project Team needed to give other functions

earlier visibility of modified components.

It was an objective of the Research Program to model a mechanism

that provided effective Revision Level Management for both the

data related aspects of the part number and the physical entities

themselves. The tools and objects had to ensure that all members in

the process had their requirements fulfilled by considering the

multiple abstractions of the same object.
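
The two abstractions of the same part can be sketched as below, purely as an illustration. The names `Part`, `Revision`, `engineering_view` and `logistics_view` are hypothetical; the sketch assumes a simple integer revision level, which the paper does not specify.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Revision:
    """One iteration of a part; logistics treats each as a separate entity."""
    part_number: str
    level: int
    spec: str


@dataclass
class Part:
    part_number: str
    revisions: list = field(default_factory=list)

    def iterate(self, spec):
        # Each design iteration gets the next revision level.
        revision = Revision(self.part_number, len(self.revisions) + 1, spec)
        self.revisions.append(revision)
        return revision

    def engineering_view(self):
        # Engineers see one evolving part: the latest iteration.
        return self.revisions[-1]

    def logistics_view(self):
        # Logistics sees every iteration as a distinct, separately managed key.
        return [(r.part_number, r.level) for r in self.revisions]


seal = Part("P-1001")
seal.iterate("original specification")
seal.iterate("revised lip profile")
```

Both views are derived from the same stored revisions, so neither function has to maintain its own copy of the part.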

In the "as is system" no holistic or consistent method existed for

managing any task related activities for part supply. The only

processes were department based and tended to reinforce the

sequential nature of processing a part's design & supply.

It was an objective of the research to provide a mechanism that

reflected the key events in the entire Part Supply

logistics cycle. It had to embody the data associated with each

discrete activity. For example, Part Level Timing had to be based on

a wide-ranging spectrum of Business Activities, not just Engineering

events. Where necessary, the delivered tool had to allow the Business

Events to occur concurrently, not sequentially.

Given that non Project Team based activities were included in the

Part Supply Processes, then the tool needed to cross any IT

(Information Technology) and process boundaries. Typically, this

included the "Systems" used by Logistics, Manufacturing, Purchasing

etc. as they all strove to enforce their own timing mechanism &

principles onto the process. The integration had to be both seamless

and automatic to avoid any resistance to change.

To overcome these limitations, a common key had to be found

between them and exploited to eliminate any sequential processing or

re-interpretation of processes. The same was true of "terms &

definitions". A glossary of common definitions had to be

established in the data dictionary.

The existing Parts Project Timing utilities did not recognize any

of the characteristics of a part that would consequently affect the

processes that were managed to provide them. Based on a form of

"Coding & Classification" of parts, it was an objective of

the research to introduce a process that ensured that only the

correct "Business Activities" were attracted to a part, thus

eliminating any duplicated or wasted effort. This approach required

all participants to agree upon the definition of a part's

characteristics and the mandatory Business Timing events associated

with each.
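
A minimal sketch of this idea follows, assuming a lookup table from part classification to mandatory timing events. The classification codes and event names in `MANDATORY_EVENTS` are invented for illustration and are not the company's actual coding scheme.

```python
# Hypothetical classification codes and the mandatory Business Timing
# events agreed for each of them.
MANDATORY_EVENTS = {
    "bought_out": ["release drawing", "place order", "approve first-off sample"],
    "made_in_house": ["release drawing", "commission tooling", "approve first-off sample"],
    "carry_over": [],  # unchanged part: no new timing events are attracted
}


def timing_plan(classified_parts):
    """Attach to each part only the events its classification requires."""
    plan = {}
    for part_number, part_class in classified_parts.items():
        plan[part_number] = list(MANDATORY_EVENTS[part_class])
    return plan


parts = {
    "P-1001": "bought_out",
    "P-1002": "made_in_house",
    "P-1003": "carry_over",
}
plan = timing_plan(parts)
```

Because the events are driven by the classification, a carried-over part attracts no activities and a bought-out part never attracts tooling work.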

The developed tool had to ensure that the Forwards Scheduling

Timing Philosophy adopted by the Project Team to develop

components was compatible and workable with the remaining

functions' beliefs & methods of operation. Invariably, these other

functions used Backwards Scheduling of parts to meet a specified

Product Implementation Date.
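
The difference between the two timing philosophies can be shown with a small worked sketch. The activity durations and dates below are assumed values chosen only to make the arithmetic visible; the function names are hypothetical.

```python
from datetime import date, timedelta


def forward_schedule(start, durations_days):
    """Plan activities one after another, working forwards from a start date."""
    plan, cursor = [], start
    for days in durations_days:
        end = cursor + timedelta(days=days)
        plan.append((cursor, end))
        cursor = end
    return plan


def backward_schedule(due, durations_days):
    """Plan the same activities working backwards from a required date."""
    plan, cursor = [], due
    for days in reversed(durations_days):
        begin = cursor - timedelta(days=days)
        plan.append((begin, cursor))
        cursor = begin
    plan.reverse()
    return plan


durations = [10, 20, 5]  # assumed activity durations in days
forwards = forward_schedule(date(1996, 1, 1), durations)
backwards = backward_schedule(date(1996, 3, 1), durations)

# Slack between the earliest possible start and the latest allowable start.
slack_days = (backwards[0][0] - forwards[0][0]).days
```

With these assumed figures the forward plan finishes on 5 February 1996, the backward plan must start by 26 January 1996, and the two views are compatible with 25 days of slack. A negative slack would signal that the two philosophies conflict for that part.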

Any costing of Model Year Features was based upon the BOM

Structures created as the project evolved using financial details

rolled up level by level. Unfortunately, Bills Of Material were

traditionally the last item to be checked and usually consisted of

'References Number' relationships giving no indication of the

component object or its attributes.
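
Rolling costs up level by level through a Bill Of Material can be sketched as a simple recursion. The structure, quantities and costs below are invented for illustration, and `rolled_up_cost` is a hypothetical helper rather than part of the delivered software.

```python
def rolled_up_cost(bom, unit_cost, item):
    """Cost of an item: its own cost plus the rolled-up cost of its children."""
    own = unit_cost.get(item, 0.0)
    children = bom.get(item, [])
    return own + sum(qty * rolled_up_cost(bom, unit_cost, child)
                     for child, qty in children)


# Hypothetical structure: parent -> list of (child, quantity per parent).
bom = {
    "door": [("panel", 1), ("hinge", 2)],
    "hinge": [("pin", 1), ("leaf", 2)],
}
# Hypothetical costs; the figure for "door" stands for its assembly cost.
unit_cost = {"door": 5.0, "panel": 40.0, "pin": 0.5, "leaf": 2.0}

hinge_cost = rolled_up_cost(bom, unit_cost, "hinge")
door_cost = rolled_up_cost(bom, unit_cost, "door")
```

The sketch also shows the dependency discussed in this section: no cost can be produced for `door` until every level of its structure has been entered.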

Only the Description gave the ability to define its

appropriateness and this was not part of the key. Even with a

complete Bill Of Material structure, the perspective given to it was

'manufacturing' so it was not wholly effective for establishing the

true costs. Further, the discrete modules in the bill had to be

replicated many times in order to cover all of the Model / Market

combinations under review.

It was an objective of the research to provide a mechanism that

gave costing information earlier and on a more generic or

representative basis. It had to be disassociated from the Bill Of

Material dependency.

During the Product Development Cycle, a mechanism had to be

provided that enabled "Concerns" to be registered and managed. What

existed was fragmented & duplicated into Engineering Concerns,

Manufacturing Concerns, Owner Concerns etc., with no consistent or

common basis of management between them.

In the "as is model" no process existed for the management of

Jigs, Tools & Fixtures. It was an objective of the

research to provide such a facility and to ensure that it interacted

with any Part Level Timing processes.

Overall, the Research Program and the development of the

Research Methods Tool

Set had to conform to the following:

- Be developed with the full understanding of the Feature Group

Leaders so that it challenged the existing roles & tools that

they would have naturally employed.

- Remove the reliance on traditional Departmental Operating

Procedures so that it helped the Feature Group Leaders/ Feature

Owners by being an enabler not a burden.

- Remove the reliance on clerical / manual processes

traditionally conducted by "clerks". These types of resources were

not available due to the sweeping manpower reductions in the

company. The Research Methods Tool Set had to empower Project Team

Members, not reinforce their dependency on bureaucratic

activities.

It is important to recognize that in order to define &

relate to the requirements of the Project Team, we had to spend

approximately three months formulating & refining the above

Business & Academic Research Objectives. This was after

understanding and modeling the "as is system" with a new

methodology (to be published at a later stage).

The requirements were expressed in the form of "Process or

Functional Requirements" reviewed by both the Project Team / Key

Stakeholders and the non Project Team dependent Process Owners.

They were publicly reviewed and critiqued at the 1996 Model Year

Concept Review event within the company. This was a formal Go/ No Go

decision point when all participants could raise any Concern and

expect a formal structured answer to their query. The event confirmed

that the objectives were acceptable and the "go ahead" was

granted.

Modeling Introduction

The modeling phase represents the initial and prime academic focus

for the Research Program. It had three distinct attributes

underpinning the fulfillment of the aforementioned objectives.

Overall, the modeling phase needed to accomplish the following:

1. Model the abstract Data, Processes &

Objects used within a typical Project Phase. Most importantly,

this required an acceptance of common definitions for Data &

Objects; especially what they represented and conveyed to the

participants. They had to be applied with as much concurrency as

possible in the Product Life Cycle.

2. Ensure that sufficient compatibility existed between

the Research Methods Tool Set elements to provide an iterative

development capability. For example, although Process Modeling

initiated the Object Modeling phase, enough was learnt from

reviewing the objects, their structures and the events that

transformed them to prompt re-analysis within Process Modeling, in

order to further understand the classification of the objects. The

key issue was to ensure that the output from one tool was relevant

to, and compatible with, the input requirements of another.

3. Provide sufficient details from which the Real World

Business Processes & Application Software could be created

giving the Project Team any tools and activities they needed to

develop the Model Year Features by Parallel Processing.

In reference to the above, our extensive Figure 5 shows that, to

fulfill the research objectives, the basic sequence had to be as

follows:

1. An initial Top Down identification of the data

within the domain under review.

2. The identification & modeling of Processes within

the Domain acting upon the data

3. Building upon the first two steps, a Bottom Up

definition & modeling of the Objects which exist in the

problem domain. These were either derived from the initial Data

Modeling or from the Inputs, Outputs, Controls & Resources

upon the identified Process Models.

Initially, each of these modeling techniques focused on its own

particular aspect or strength, paying minimal attention to the

requirements of others in the Research Methods Tool Set. Only with

subsequent iterations after the initial definition were the

requirements of adjacent neighbors considered thus giving greater

prominence to integration.

For example, both the non physical data & physical real world

items were viewed as objects, together with how they interrelate.

This occurred not only across the output from each technique but

also by considering the different philosophies they embodied and

operated. For example, we started with a top down methodology,

initiated a bottom up focused sequence of events and then merged the

two together through incremental and iterative steps to provide a

consistent & uniform definition across all of the modeling

techniques ([7]).

As Figure 5 depicts, these iterations were conducted with constant

reference to the initial research objectives. This re-checking of

results against requirements provided a useful technique for raising

the awareness of the Project Team Members and minimizing any

resistance to change. With the author providing a focus for modeling

activities, this reduced to a minimum any modeling inconsistencies

typically found when numerous domain experts & modellers from

different backgrounds work together.

The iterative modeling exercise could have continued indefinitely

so we employed some guidelines to define when to initiate

application software development and when to conclude Phase 2.

When the first Data & Process Modeling exercises were completed, then

the first cut Logical Entity Diagrams and Process Models were

used to start the development of the Application Software.

The Logical Entity Diagrams were a familiar tool used by the

Software Developers but the Process Diagrams, their

intent and key messages, were communicated to the Project Team

members. The Process Models indicated the key objects used

within the Project and gave an elementary view of their internal

structure. It was recognized that the Application Software would have

to support the creation, population & management of these key

objects.

From the above two sources, the Object Model was created

based upon the lowest level Process Model. Again, anything learnt

from this particular step was included in the Application Software

Design. This provided a clearer definition of the types of data

objects that any software was required to manage and a clearer

understanding of their internal structure.

Within this step, Object Modeling also helped to identify the

missing Processes that were assumed or hidden within the Process

Model. Iterative Object Modeling & Process Modeling continued,

continually revealing the Processes, Objects and their appropriate

structures.

Process modeling down to the 4th level of decomposition proved to

be the key to identifying all of the processes within the modeled

project phase. During this phase we also identified the key internal

structures of the objects, ensuring that the object modeling results

consistently reflected all associations between Rules / Hypotheses

& Objects. A comprehensive Process Model signified a

formal end to Phase 2.

Data Modeling

Having confirmed the objectives, Data Modeling was

conducted by either the authors or the Software Modellers. The remit

was to identify key data entities and relationships necessary to

support and attain the above business & academic functions.

The knowledge was captured in the IDMS Architect product in the

form of Data Flows, Data Elements or Data

Inventory, the Entities associated with these and the

relationships expressed between the entities.

Data Modeling also provided an elementary understanding of the

Processes needed to maintain these Data Objects. These were expressed

in the form of Functional Requirements. As such it was a static

representation of the domain giving only a minimal understanding of

the dynamic interaction of the entities in terms of, say, the

chronological evolution of the values associated with each of the

data elements.

All of the knowledge captured during the first Data Modeling

exercise was expressed as Logical Data Structures/ Models with their

Functional Descriptions. They were used to confirm our initial

understanding with the domain experts. From that perspective it

provided a very useful and stable basis from which to proceed to

develop application software.

Although this was a necessary first step, experience and use

proved that the required degree of decomposition and clarity of

objects (both data and physical) and their internal structures,

could only be provided by Process & Object Modeling. The

usefulness of the output from this initial Data Modeling step, even

later in the iterative stages, diminished as the research continued

and the emphasis turned to a wider Object Oriented approach.

However, using this initial Logical Data Structure, the concept of

Classification was applied to generate an initial Object Orientated

perspective to the resultant Data objects.

This deliverable initiated the basis of work from which to fulfill

the research objectives. Grouping or categorizing the data elements

caused us and Domain Experts to consider the perceived internal

structure of the Objects. As this continued, so more effort was

expended to keep the Data Objects derived from this technique

complementary to those from Process Modeling.

The prime driver was ensuring consistency of approach &

results between the two modeling techniques.

Process Modeling

Building upon the outline Process definitions from Data

Modeling, a Top Down Process Modeling exercise was conducted

by us using our IDEF_PA methodology (to be published at a later stage

in detail). The focus of attention was to define the generic

processes used within the project development phases.

Starting from the top level process, the modeling exercise

decomposed down through the levels until it was felt that sufficient

structural detail of key objects was uncovered.

For example, the modeling exercise identified the need for an

Addendum Sheet within the Project Team and the need to then communicate

the Part's Engineering Specification to outside bodies. The Logical Data

Structures and the Bill Of Material function Data Flow Diagrams

provided a guide to us, ensuring that the entities and events were

included within the resultant process models.

Unlike the Data Modeling exercise, Process Modeling revealed the

Physical Objects that could be encountered or created at any

point in time and how they related to the associated Data Objects

invariably used to control or manage their physical

manifestation.

The initial results from the Process Modeling exercise were the

first draft IDEF_PA diagrams and the Data Dictionary / Physical

Object Library descriptions. These gave more detail and a

consistent definition, albeit elementary, to the structure of the

Data & Physical objects.

The Process Models further outlined those activities that would be

dependent upon IT (Information Technology) based support and were

consequently used by the Software Development Group to initiate

further design activities. This was done under the direct management

of the first Author.

The Data Dictionary helped to provide a Logical definition of the

data being managed which complemented the Data Modeling design

activities started earlier.

Those processes to be undertaken by purely manual means could be

brought to the attention of the Feature Group Leaders & Feature

Owners to gain their consent to the philosophy. With both types of

processes upon the Process Model, they were able to comprehend what

was being proposed and anticipate what could be done in parallel and

what couldn't.

The decomposition process was of little interest to them. They

were only interested in what was expected of them. The definition of

compatibility between Modeling techniques was solely within the remit

of the Research Program.

Having established the basic Processes and the structure of the

Objects (Data or Physical) within them, these static perspectives

formed the basis to initiate any definitions within the Dynamic

Modeling, Object Oriented Tool.

It quickly became apparent that the Process Model only had to go

to the 4th level of iteration. The Feature Group Leaders &

Feature Owners defined this as the most appropriate level. Level 3

only indicated the key milestones in a part's life cycle whereas level

5 was considered to be too much detail to comprehend, absorb, retain

& recall.
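
The level-limited decomposition described above can be sketched as a small tree structure. This is only an illustration in Python, not IDEF_PA itself: the `Process` class, the `at_level` helper and all process names are hypothetical, since the paper does not list the actual model content.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Process:
    """One node in a top-down process decomposition (hypothetical structure)."""
    name: str
    children: List["Process"] = field(default_factory=list)


def at_level(root: Process, level: int) -> List[str]:
    """Return the names of processes exactly `level` levels deep (root = level 1)."""
    if level < 1:
        raise ValueError("level must be >= 1")
    current = [root]
    for _ in range(level - 1):
        # Step one level down by collecting the children of every current node.
        current = [child for node in current for child in node.children]
    return [node.name for node in current]


# Illustrative names only; they are assumptions, not taken from the paper.
model = Process("Develop model year", [
    Process("Define part", [
        Process("Create part record", [
            Process("Assign part number"),
            Process("Record authorship"),
        ]),
    ]),
])

print(at_level(model, 4))
```

Stopping the traversal at level 4 mirrors the decision reported above: level 3 would show only milestones, and anything deeper was judged too detailed for the Feature Group Leaders to work with.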

Object and Rules Modeling

Object Modeling was conducted solely by us and gave an added

dimension to the Research Methods Tool Set. All of the preceding

modeling iterations provided a Static perspective of the Processes

& Objects. Object Modeling instead gave a chronological view of the objects and

their structures showing how they were either created, modified or

destroyed as the model ran.

Using the first Process Models and the supporting Data

Dictionaries / Physical Objects Libraries, the initial static

definitions of classes for the objects were recreated in our

Knowledge Based Expert System, NEXPERT. Then by following the

Process Models' events, the NEXPERT Rules & Hypotheses were

created. Initially they had no dependencies specified within them. No

internal Input Tests or Output Actions were specified.

Only for those agreed level 4 Processes exhibiting some degree of

explicit Data & Object Structures / Classes (from the Data

Dictionaries & the Physical Object Libraries) were the first

Conditions & Actions Statements created. These statements

utilized the Class definitions from Process Modeling which

corresponded to the Controls upon the IDEF_PA diagrams.

Constructing the Object Model followed the sequence of proposed

Process events from the Process Models. Where the Process Model was

ill defined (e.g. because it was only a first iteration), the

corresponding Rule Structure of Conditions & Actions had to be

left incomplete.
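
The idea of a Rule built from Conditions and Actions, left incomplete where the Process Model was still ill defined, can be sketched as follows. This is a minimal Python illustration and not NEXPERT syntax: the `Rule` class, the `set_status` helper, the attribute names and the value `ES-001` are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

DataObject = Dict[str, object]  # attribute name -> current value


@dataclass
class Rule:
    """Conditions and Actions for one level 4 process (hypothetical structure)."""
    hypothesis: str
    conditions: List[Callable[[DataObject], bool]] = field(default_factory=list)
    actions: List[Callable[[DataObject], None]] = field(default_factory=list)

    def is_complete(self) -> bool:
        # Where the process model was ill defined, the rule stays incomplete.
        return bool(self.conditions) and bool(self.actions)

    def fire(self, obj: DataObject) -> bool:
        """Apply the actions only if the rule is complete and all conditions hold."""
        if not self.is_complete():
            return False
        if not all(condition(obj) for condition in self.conditions):
            return False
        for action in self.actions:
            action(obj)
        return True


def set_status(value: str) -> Callable[[DataObject], None]:
    """Build an action that writes `value` into the object's status attribute."""
    def action(obj: DataObject) -> None:
        obj["status"] = value
    return action


# Illustrative rules; attribute names and values are assumptions.
release = Rule(
    hypothesis="part_can_be_released",
    conditions=[lambda obj: obj.get("specification") is not None],
    actions=[set_status("released")],
)
undefined = Rule(hypothesis="part_can_be_costed")  # left incomplete

part = {"specification": "ES-001", "status": "draft"}
print(release.fire(part), part["status"])
print(undefined.fire(part))
```

An incomplete rule never fires, so a gap in the Process Model shows up as a hypothesis that cannot be evaluated rather than as a wrong result.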

The initial Object Modeling exercise also provided a stimulus to

consider the hierarchy needs within the Process Models. Although not

requiring Parent Classes, the classes under review had to have

superclasses if consistency of definition was to be proved.

Having established the Static Model within NEXPERT, the

dynamic processes were conducted based upon the incomplete

model. It soon became apparent that the Object Model would give a

chronological view of the Data Values showing how the initial

structures and values were populated / modified as NEXPERT conducted

an inference session.

For example, the tool showed how the authorship value had to

change as the part progressed through the various events in its

creation, association into a Bill of Material, transfer to MASCOT for

Order Requirements planning and then buy off and acceptance by the

Product Support Group processes.
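
The chronological view of a single data value can be illustrated with a short trace. In this Python sketch the event names follow the text above, but the authorship values (`author-A` and so on) are placeholders, because the paper does not say who holds authorship at each stage; `trace_authorship` is a hypothetical helper.

```python
from typing import Dict, List, Optional, Tuple

# Event names follow the text; the authorship values are placeholders.
EVENTS: List[Tuple[str, str]] = [
    ("creation", "author-A"),
    ("association into a Bill of Material", "author-B"),
    ("transfer to MASCOT", "author-C"),
    ("buy off and acceptance", "author-D"),
]

History = List[Tuple[str, Optional[str], str]]


def trace_authorship(events: List[Tuple[str, str]]) -> History:
    """Apply each event in order and record (event, old value, new value)."""
    part: Dict[str, Optional[str]] = {"authorship": None}
    history: History = []
    for event, new_author in events:
        old_author = part["authorship"]
        part["authorship"] = new_author
        history.append((event, old_author, new_author))
    return history


for event, old, new in trace_authorship(EVENTS):
    print(f"{event}: {old} -> {new}")
```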

Again, the information derived from this initial process

established the dynamic values for the data objects that the

application software would have to include. This initial phase

concluded when an Object Model was constructed for the corresponding

Process Model as it existed from that first initial attempt.

Iterative Modeling

The initial object modeling exercises were based upon the

knowledge derived from the first Process Modeling iteration. Having

recognized this as a starting point for the complete Research Methods

Tool Set, we fed the results back into the Process

Modeling.

The process of expanding both Object & Process models

continued until each had a complete, corresponding &

comprehensive definition of Processes (or Rules/ Hypotheses), and

Data Dictionaries / Physical Object Libraries (or Classes or

Objects that were the instances of these classes) at the 4th level

of modeling decomposition.
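
The stopping condition described above, where both models hold corresponding definitions, can be expressed as a simple cross-check. The following Python sketch is an assumption about how such a check might look, not the authors' tooling; the function names and the sample entries are hypothetical.

```python
from typing import Dict, Iterable, List


def correspondence_gaps(
    processes: Iterable[str],
    rules: Iterable[str],
    dictionary_entries: Iterable[str],
    classes: Iterable[str],
) -> Dict[str, List[str]]:
    """List items present in one model but missing from the other."""
    p, r = set(processes), set(rules)
    d, c = set(dictionary_entries), set(classes)
    return {
        "processes_without_rule": sorted(p - r),
        "rules_without_process": sorted(r - p),
        "entries_without_class": sorted(d - c),
        "classes_without_entry": sorted(c - d),
    }


def models_correspond(gaps: Dict[str, List[str]]) -> bool:
    """The iteration can stop once no gaps remain in either direction."""
    return not any(gaps.values())


# Illustrative level 4 content; all names are assumptions.
gaps = correspondence_gaps(
    processes=["create part", "add to BOM"],
    rules=["create part"],
    dictionary_entries=["part number", "addendum sheet"],
    classes=["part number", "addendum sheet"],
)
print(gaps["processes_without_rule"], models_correspond(gaps))
```

Each non-empty list points at the model that needs another iteration: a process without a rule sends work to the Object Model, a class without a dictionary entry sends it back to the Process Model.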

As we can see in Figure 5, the prime conduit for feedback was to

the Process Models, not the Data Models. The data models were only

useful to initiate the process, for soon afterwards the evolution of

the Process Definitions and the Object Classes provided more

comprehensive definitions.

It was clear that Object Models were mandatory for:

1. the definition of data and objects, and

2. the definition of how objects moved through the

system;

whilst the Process Models:

3. confirmed the structures, and

4. decided which processes would be IT (Information

Technology) based and which not.

Application Software Development Activities

The application software was the real world implementation,

supporting any IT-dependent processes that the team would use,

based on the preceding Abstract modeling phase. The results from the

modeling phase were used to construct the application software

structure and, to some extent, the processing logic. This commenced

with the output from the first Data Modeling exercise and was

significantly enabled by Process Modeling.

However, there was some degree of feedback from this Business

Software phase to the modeling activities. Having the application

software in front of them to use, even though it was in a test

environment, enabled the Feature Group Leaders & Team Members to

further mentally model the processes & what was required to

initiate, control or deliver what they expected.

This ability and the management

decision to develop the software itself by a process of Rapid

Application Development (i.e. through iterative cycles) brought the

Software Design processes into the "modeling arena".

For example, Process Modeling defined the IT dependent activities

and the data elements that would be transformed. Using the Software

in conjunction with the Process Models provided a stimulus to

consider the expansion of the application software to also include

any neighboring Process requirements.

If a part number record was created by the software then the

process & object models would illustrate the data required and

the individual steps needed to compile or define the data. Reference

to the application software design showed what could be done in a

single transaction and thus did not require devolution into the smaller

sub-processes shown upon the Process & Object Models.

Also, application software prototyping provided the 'User

Friendly' stimulus to the Project team ([14], [16]

and [17]). It enabled them to speculate on the finer details

of data requirements not revealed during Data, Process & Object

Modeling. This was especially relevant when the initial concatenation

of data to form meaningful Codes & Classifications was outside

the immediate capabilities of the Process Models.

During the Data, Process & Object Modeling phases, the results

would have only stated that a particular activity had to be

performed. The Process Models were used to define whether they were

IT or non IT dependent. Only by conducting application software

development and including such aspects as Data Volumes was the 'type'

of IT processing considered. These factors were not prompted or

included in the Process or Object Modeling phases.

For example, creation of a Part was an on-line, real-time

requirement. This was mandatory and non-negotiable in order for the

team to act quickly. However, many other factors such as data

complexity, numbers of records etc. were only considered during Data

Modeling and the speculative Software Design phases.

These factors influenced whether data creation or transfer could

be conducted in 'Batch' or as a lower priority 'On-line' task. The

Process & Object Modeling phases did not include these

considerations.

Conclusions and Summary

In this research case study oriented paper we have attempted to

introduce how the various elements of the Research

Methods Tool Set (as shown in Figure 10) were used in

an iterative manner to model the Data, Processes & Objects in a

Model Year Program Phase at Rolls-Royce Motor Cars as a result of a

major enterprise re-engineering process. We have shown how each

element was used in parallel to provide both an overall and an

individual perspective for each of the various techniques

employed.

Having defined the process and the objects to the 4th level of

decomposition, the Application Software activities were accelerated.

It was felt that this gave the correct and appropriate level of

knowledge from which to proceed with minimum risk.

It was believed that the Modeling phase, through a process of

communal design, would be the prime stimulus for "mental modeling".

However, we learnt that this phase was significantly enhanced and

accelerated by the provision of Application Software, in a controlled

test environment, prior to its general release. In this managed

environment, the end users were able to build upon the Process &

Object Models to test their own understanding of how the activities

and events would work together. This exercise proved to be a fruitful

source of refined knowledge.

Significant effort was expended by us in defining a set of

resultant models that operated at the same consistent level of

decomposition or abstraction. The models that were produced could be

related to each other, making comprehension & understanding that

much easier. Further, the use of a Data Dictionary in IDEF_PA

and the consequent population of Classes in NEXPERT provided a

mechanism to define an element once (Data or Physical) and to show

the dependent processes that acted upon it. This enabled us to

further identify those processes conducted serially and to challenge

why they could not be started either earlier or in parallel with

others.
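
Defining each element once and recording which processes act upon it makes the challenge to serial processes mechanical. The Python sketch below is one possible reading of that idea, under the assumption that each process is annotated with the elements it reads and writes; `parallel_candidates` and all process and element names are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def parallel_candidates(
    sequence: List[str],
    reads: Dict[str, Set[str]],
    writes: Dict[str, Set[str]],
) -> List[Tuple[str, str]]:
    """Return consecutive process pairs that share no data dependency."""
    candidates = []
    for first, second in zip(sequence, sequence[1:]):
        conflict = (
            writes[first] & reads[second]      # second needs first's output
            or reads[first] & writes[second]   # second overwrites first's input
            or writes[first] & writes[second]  # both update the same element
        )
        if not conflict:
            candidates.append((first, second))
    return candidates


# Illustrative serial sequence; names are assumptions, not from the paper.
sequence = ["create part", "add to BOM", "prepare addendum sheet"]
reads = {
    "create part": set(),
    "add to BOM": {"part number"},
    "prepare addendum sheet": {"part number"},
}
writes = {
    "create part": {"part number"},
    "add to BOM": {"BOM entry"},
    "prepare addendum sheet": {"addendum sheet"},
}
print(parallel_candidates(sequence, reads, writes))
```

A pair reported here is only a candidate: it shows that no shared element forces the order, so the team can then ask whether some other constraint does.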

It should be noted that the currently available and validated

modules and already integrated methodologies of the research toolset

are in use in the company and are successfully applied to the

development of processes for new model designs and developments.

Acknowledgments

The authors would like to express their appreciation to

Rolls-Royce Motor Cars Limited, Crewe, the University of East London,

UK, Nexpert and NJIT (New Jersey Institute of Technology), New

Jersey, USA, for their continuous support of this research

project.

References

[1] Ashton, P.T and Ranky, P G: A methodology for

analysing concurrent engineering and manufacturing processes at

Rolls-Royce Motor Cars Limited, International IEEE CIM Conference

proceedings, Singapore, 1993.

[2] Bisgaard, S.: A conceptual framework for the use of

quality concepts and statistical methods in product design. Journal

of Engineering Design. Vol 3(2), 1992 p. 117-133.

[3] Barghouti, N. and Kaiser, G.: Modelling concurrency in

rule based development environments. IEEE Expert. p. 15-27, 1990

[4] Booch, G.: Object Oriented Design with Applications.

Benjamin/Cummings, 1990.

[5] Kumar, S and Gupta, Y: Cross functional teams improve

manufacturing at Motorola's Austin Plant. Industrial Engineering, p

32-36, May 1991.

[6] Lu, S.C.Y Annual Report. Knowledge based engineering

systems laboratory annual report 1992. University of Illinois at

Urbana-Champaign.

[7] McIntosh, K: Engineering Data Management, Engineering.

p 21-23 April 1992.

[8] Ranky, P G: "Manufacturing

Database Management and Knowledge Based Expert Systems" CIMware

Ltd., ISBN 1-872631-03-7, 240 p.

[9] Ranky, P G: "Flexible

Manufacturing Cells and Systems in CIM", CIMware Ltd., ISBN

1-872631-02-9, 264 p.

[10] Ranky, P G: "Concurrent

/Simultaneous Engineering Methods, Tools and Case Studies"

CIMware Ltd., ISBN 1-872631-04-5, 264 p.

[11] Wu, B: WIP cost related effectiveness measure for the

application of an IBE to simulation analysis. Computer Integrated

Manufacturing Systems. Vol 3 (3), p 141-149, 1990.

[12] Ashton, P. T and Ranky, P G: The Methodology and

Research Toolset for Analyzing and Implementing Parallel Engineering

Processes at Rolls-Royce Motor Cars Ltd., Flexible Automation 1996,

Proceedings of the Japan/USA Symposium on Flexible Automation, IEEE,

ASME, Boston, July 1996. p. 691-696.

[13] Ashton P T and Ranky P G: The Research, Development,

Validation and Application of an Advanced Concurrent (Parallel)

Engineering Research Toolset at Rolls-Royce Motor Cars Limited, ETFA

'97, The 6th International IEEE Conference on Emerging Technologies

and Factory Automation, Los Angeles, September 9-12, 1997.

Proceedings

[14] Ranky, P G, Ranky M F, Flaherty, M, Sands, S and

Stratful, S: Servo Pneumatic

Positioning, An Interactive Multimedia Presentation on CD-ROM

(650 Mbytes), March 1996, CIMware Ltd., www.cimwareukandusa.com

[15] Ranky, P G: An

Introduction to Concurrent/ Simultaneous Engineering, An Interactive

Multimedia Presentation on CD-ROM with off-line Internet support

(650 Mbytes), 1996, CIMware Ltd., www.cimwareukandusa.com

[16] Ranky, P G: An

Introduction to Flexible Manufacturing, Automation and Assembly, An

Interactive Multimedia Presentation on CD-ROM with off-line

Internet support (650 Mbytes), 1996, CIMware Ltd.,

www.cimwareukandusa.com

[17] Ranky, P G: An

Introduction to Total Quality and the ISO9001 Standard, An

Interactive Multimedia Presentation on CD-ROM with off-line Internet

support (650 Mbytes), 1997, CIMware Ltd., www.cimwareukandusa.com

[18] Thornton, A C: The use of constraint-based design

knowledge to improve the search for feasible designs, Engineering

Applications of Artificial Intelligence, 1996, 9(4) 393 - 402

[19] Mayer, R J et al.: The auto-generation of analysis

models from product definition, International Journal of

Manufacturing Technology, 1996 12(3) 197-207.

[20] Trappey, A J C, Peng, T K et al: An object oriented

bill of materials system for dynamic product management, Journal of

Intelligent Manufacturing, Oct 1996 7(5) 365-371.

[21] Potter, C D: CAD/CAM special report: PDM: reports on

work in progress, Computer Graphics World, Sep 1996 19(9)

[22] Malhotra, M K, Grover, V et al: Re-engineering the

new product development process: a framework for innovation and

flexibility in high technology firms, Omega, 1996 24(4) 425-441.
